I finally got my 10 Gigabit connection working. It took many hours scattered over two months, from the suspicion that the second-hand hardware might be faulty, through driver problems, to Linux kernel issues. But all in all, is a 10 Gbit home network worth it?

To be honest, I thought I would plug the NICs into my server and my PC, install the drivers, and have everything running within an hour. At first it did.

But when I tried some speed tests with Samba, the connection froze after a few hundred megabytes.

The first weekend

The kernel messages on my server gave at least a hint of what was happening:

    [ 136.232732] bna: enp1s0f2 link up
    [ 136.232740] bna: enp1s0f2 0 TXQ_STARTED
    [ 160.270896] bna: enp1s0f2 link down
    [ 160.270900] bna: enp1s0f2 0 TXQ_STOPPED
    [ 167.618942] bna: enp1s0f2 link up
    [ 167.618960] bna: enp1s0f2 0 TXQ_STARTED
    [ 169.779896] bna: enp1s0f2 link down
    [ 169.779901] bna: enp1s0f2 0 TXQ_STOPPED
    [ 177.635992] bna: enp1s0f2 link up
    [ 177.636000] bna: enp1s0f2 0 TXQ_STARTED
    [ 179.247408] bna: enp1s0f2 link down
    [ 179.247414] bna: enp1s0f2 0 TXQ_STOPPED
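To see how long the link survives each time, the timestamps in such a log can be subtracted pairwise. The following is only a small sketch: the function name `link_up_durations` is made up for illustration, and it assumes the log lines look like the bna messages shown here (a bracketed timestamp in seconds, then "link up" or "link down").

```shell
#!/bin/sh
# Hypothetical helper: read kernel log lines on stdin and print, for every
# "link up" followed by a "link down", how many seconds the link stayed up.
link_up_durations() {
    awk '
        # Extract the timestamp (seconds since boot) from the leading "[ ... ]".
        match($0, /[0-9]+\.[0-9]+\]/) { ts = substr($0, RSTART, RLENGTH - 1) }
        # Remember when the link came up.
        / link up/ { up = ts; have_up = 1 }
        # On the matching "link down", print the time the link was up.
        / link down/ && have_up { printf "%.2f\n", ts - up; have_up = 0 }
    '
}
```

It could be used as `dmesg | grep bna | link_up_durations`. For the log above, the link held for roughly 24 seconds the first time and then for only about 2 seconds on each of the next two attempts.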

But literally nobody on the whole internet had ever posted similar output. So at first I suspected the second-hand Brocade 1020 cards. Since my Linux PC didn’t show any of those errors, my first suspect was the NIC in the server.

I therefore swapped the two network cards. The error still happened on the server side. Then I tested the connection with iperf. Sending from the server to the PC was no problem. But in the reverse direction, the connection dropped again after a few seconds. Enough for now…
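A direction test like this can be run from one machine. The sketch below assumes iperf3, whose `-R` flag reverses the direction so that the remote side sends, and an `iperf3 -s` instance already running on the other host. The function name `iperf_both_ways` and the 30 second default are my own choices, not something from the original test.

```shell
#!/bin/sh
# Hypothetical helper: run a TCP throughput test in both directions.
# $1: address of the host running "iperf3 -s"
# $2: test duration in seconds (defaults to 30)
iperf_both_ways() {
    host=$1
    secs=${2:-30}
    echo "== this machine sends to $host =="
    iperf3 -c "$host" -t "$secs" || return 1
    echo "== $host sends to this machine (reverse mode) =="
    iperf3 -c "$host" -t "$secs" -R
}
```

Called as `iperf_both_ways 192.168.178.21`, it makes it obvious which direction stalls, because the first failing run aborts the function with a non-zero exit status.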

The second weekend

A few weeks later.

I put an old hard drive into my server and temporarily installed CentOS 7.

And it worked at the first attempt. So something was wrong with my OS. CentOS 7 uses Linux kernel version 3.10.x, whereas I was running 4.1.3 on my Gentoo system.

But at least there was a glimmer of hope that the hardware was working and that the rest was just software.

Christmas and the days around New Year took up the rest of my free time in 2015.

The third weekend

So it’s already 2016… I had hoped to finish this sooner.

Since I knew that it was a software issue, I did what Gentoo users do best: compiling the kernel. Many times.

The first success was the 3.14.56 Kernel with the Brocade firmware version 3.2.3.0.

The last Linux Kernel which uses this firmware is version 4.0.x.

As expected the 4.0.9 kernel worked. Both directions, speed >= 8 Gbit/s.

Since kernel version 4.1.x, firmware version 3.2.5.1 is required. With it, the connection drops again when I try to send to my server.

So for now, I use the 4.0.9 Kernel.

Maybe there is some kind of bugfix in the future.
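The version boundary described above can be written down as a small check. This is only a sketch built on the statements in this post (kernels before 4.1 do not yet require firmware 3.2.5.1); the function name `predates_bna_firmware_3251` is hypothetical and the check knows nothing about the driver itself.

```shell
#!/bin/sh
# Hypothetical helper: succeed (exit status 0) if the given kernel release is
# older than 4.1, the first series reported here to require firmware 3.2.5.1.
# $1: kernel release such as "4.0.9-gentoo" (defaults to the running kernel)
predates_bna_firmware_3251() {
    release=${1:-$(uname -r)}
    major=${release%%.*}        # "4.0.9-gentoo" -> "4"
    rest=${release#*.}          # "4.0.9-gentoo" -> "0.9-gentoo"
    minor=${rest%%.*}           # "0.9-gentoo"   -> "0"
    minor=${minor%%[!0-9]*}     # drop a non-numeric suffix such as "-rc3"
    [ "$major" -lt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -lt 1 ]; }
}
```

For example, `predates_bna_firmware_3251 4.0.9 && echo "old firmware series"` prints the message, while the same call with `4.1.3` prints nothing.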

Conclusion

It’s difficult to draw a conclusion about this project, because it took a lot of time to get working and may never be supported by a newer Linux kernel. But in late 2015, no other network solution delivered speeds of up to 1 GB/s at this price while leaving room to expand or scale the network.

Of course you need SSDs and RAM caching to fully utilize those speeds, but that isn’t a reason to stay on Gigabit Ethernet just because the rest of your hardware can’t deliver 1 GB/s. My ZFS RAID-Z1 setup with 5 disks writes at 350 MB/s and reads at 450 MB/s, and it would be foolish to restrict those speeds to around 110 MB/s.
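The numbers behind this comparison come from a simple unit conversion. As a rough sketch (the function name `gbit_to_mbyte` is made up, and protocol overhead is ignored), the theoretical ceiling in MB/s for a given line rate is:

```shell
#!/bin/sh
# Hypothetical helper: convert a line rate in Gbit/s into the raw ceiling in
# MB/s (1 Gbit = 1000 Mbit, 8 bits per byte). Integer arithmetic only.
gbit_to_mbyte() {
    echo $(( $1 * 1000 / 8 ))
}
```

`gbit_to_mbyte 1` gives 125 and `gbit_to_mbyte 10` gives 1250. The roughly 110 MB/s quoted for Gigabit Ethernet is that 125 MB/s ceiling minus protocol overhead, which is well below what the disks can deliver.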

All in all, if you can get two (used) 10 Gbit/s network cards, two SFP+ modules and a fiber cable for around 120€, and just want to connect two PCs, I would recommend this solution.

Someday switches with SFP+ slots will be reasonably priced, and you can then use the second SFP+ port on the server NIC to connect to one of those switches. That way the server can feed many 1 Gbit connections simultaneously.

Lastly, one small optimization to improve the network speed.

Little optimization

    # iperf3 -c 192.168.178.21 -i 0
    Connecting to host 192.168.178.21, port 5201
    [  4] local 192.168.178.11 port 34501 connected to 192.168.178.21 port 5201
    [ ID] Interval           Transfer     Bandwidth       Retr  Cwnd
    [  4]   0.00-10.00  sec  9.66 GBytes  8.30 Gbits/sec    0    462 KBytes
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth       Retr
    [  4]   0.00-10.00  sec  9.66 GBytes  8.30 Gbits/sec    0             sender
    [  4]   0.00-10.00  sec  9.66 GBytes  8.30 Gbits/sec                  receiver

    iperf Done.

Add the following to /etc/sysctl.conf:

net.ipv4.tcp_timestamps = 0

and load this setting with:

sysctl -p
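Before editing the file, it can help to check what the running kernel currently uses and to try the change temporarily. The snippet below is a sketch: the function name `tcp_timestamps_state` is hypothetical, and the optional path argument exists only so the function can be pointed at a different file for testing.

```shell
#!/bin/sh
# Hypothetical helper: print the running kernel's TCP timestamp setting
# (0 means timestamps are disabled).
# $1: optional path, defaults to the standard procfs location.
tcp_timestamps_state() {
    cat "${1:-/proc/sys/net/ipv4/tcp_timestamps}"
}

# To try the setting without touching /etc/sysctl.conf (needs root and is
# lost on reboot), the value can be written at runtime:
#   sysctl -w net.ipv4.tcp_timestamps=0
```

Running `tcp_timestamps_state` before and after `sysctl -p` confirms that the new value was actually loaded.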

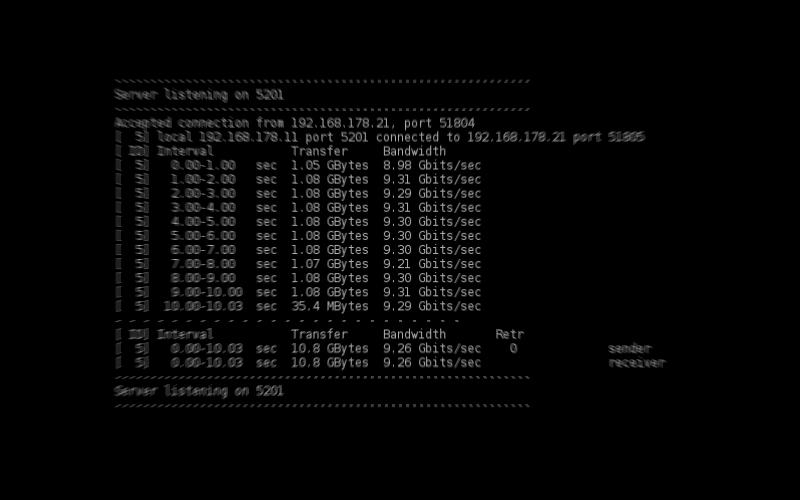

The connection speed increases by 1 Gbit/s:

    # iperf3 -c 192.168.178.21 -i 0
    Connecting to host 192.168.178.21, port 5201
    [  4] local 192.168.178.11 port 34553 connected to 192.168.178.21 port 5201
    [ ID] Interval           Transfer     Bandwidth       Retr  Cwnd
    [  4]   0.00-10.00  sec  10.8 GBytes  9.30 Gbits/sec    0    512 KBytes
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth       Retr
    [  4]   0.00-10.00  sec  10.8 GBytes  9.30 Gbits/sec    0             sender
    [  4]   0.00-10.00  sec  10.8 GBytes  9.29 Gbits/sec                  receiver

    iperf Done.

Everything else I tried, from increasing the buffer sizes and enabling jumbo frames to changing the congestion control algorithm, didn’t increase the network speed beyond the margin of error.

As always, feel free to comment if you liked this post or have questions.